A Self-Organizing Neuro-Fuzzy Q-Network: Systematic Design with Offline Hybrid Learning

Abstract

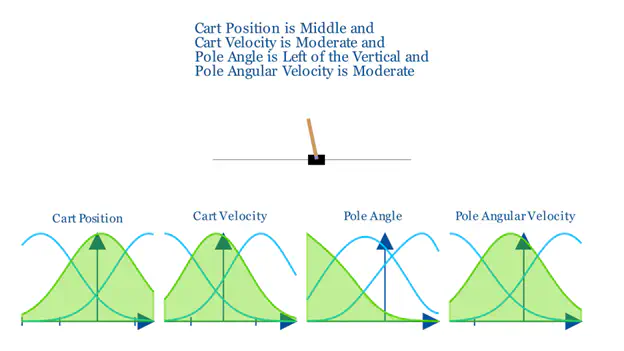

In this paper, we propose a systematic design process for automatically generating self-organizing neuro-fuzzy Q-networks by leveraging unsupervised learning and an offline, model-free fuzzy reinforcement learning algorithm called Fuzzy Conservative Q-learning (FCQL). FCQL yields policies that are more effective and more interpretable than those of deep neural networks, which facilitates human-in-the-loop design and explainability. We demonstrate its effectiveness empirically in Cart Pole and in an Intelligent Tutoring System that teaches probability principles to real human learners.
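The abstract does not spell out FCQL's formulation, so the following is only a rough sketch of the two ingredients its name suggests, under stated assumptions: a zero-order Takagi-Sugeno-Kang fuzzy rule base with Gaussian membership functions that outputs one Q-value per action, and the standard conservative Q-learning regularizer (log-sum-exp of the Q-values minus the Q-value of the logged action). The function names, the rule layout, and the choice of a product t-norm are all hypothetical and are not taken from the paper.

```python
import math

def gaussian_membership(x, center, width):
    """Degree to which scalar x belongs to a Gaussian fuzzy set (assumed shape)."""
    return math.exp(-((x - center) ** 2) / (2.0 * width ** 2))

def fuzzy_q_values(state, rules):
    """Zero-order TSK inference: firing-strength-weighted average of rule Q-values.

    Each rule is a tuple (centers, widths, q_per_action) with one Gaussian
    set per state dimension. This layout is an illustrative assumption.
    """
    strengths = []
    for centers, widths, _ in rules:
        # Product t-norm across the state dimensions
        w = 1.0
        for x, c, s in zip(state, centers, widths):
            w *= gaussian_membership(x, c, s)
        strengths.append(w)
    total = sum(strengths) or 1e-12  # guard against all rules firing at zero
    n_actions = len(rules[0][2])
    return [
        sum(w * rule[2][a] for w, rule in zip(strengths, rules)) / total
        for a in range(n_actions)
    ]

def cql_penalty(q_values, action_taken):
    """Generic CQL regularizer: logsumexp over actions minus Q of the logged action."""
    m = max(q_values)  # subtract the max for numerical stability
    lse = m + math.log(sum(math.exp(q - m) for q in q_values))
    return lse - q_values[action_taken]

# Two hypothetical rules over a 2-dimensional state with two actions
example_rules = [
    ([-1.0, 0.0], [1.0, 1.0], [1.0, 0.0]),
    ([1.0, 0.0], [1.0, 1.0], [0.0, 1.0]),
]
example_q = fuzzy_q_values([0.0, 0.0], example_rules)
example_penalty = cql_penalty(example_q, action_taken=0)
```

In an offline setting, a penalty of this kind would be added to the usual temporal-difference loss so that Q-values for actions absent from the logged data are pushed down. Because each rule reads as "if the state is near these centers, then these are the action values", the rule base remains inspectable, which is the kind of interpretability the abstract refers to.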

Publication

In 2023 International Conference on Autonomous Agents and Multiagent Systems